The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters | Symmetry

Intra and Interobserver Reliability and Agreement of Semiquantitative Vertebral Fracture Assessment on Chest Computed Tomography | PLOS ONE

Inter-observer agreement and reliability assessment for observational studies of clinical work - ScienceDirect

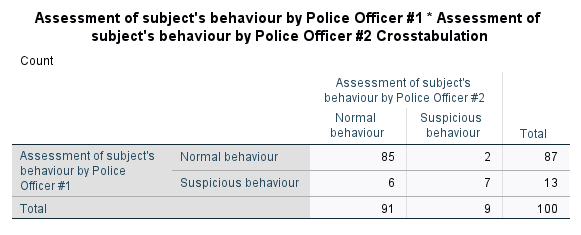

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics